Difference between revisions of "QUonG initiative"


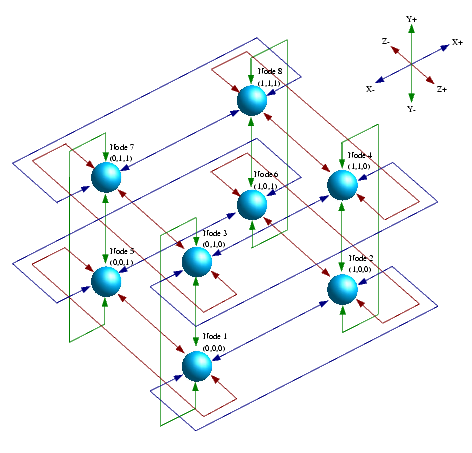


Latest revision as of 16:18, 6 October 2017

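QUonG is an INFN initiative aimed at nurturing a hardware-software ecosystem centered on Lattice QCD (LQCD).

Leveraging the know-how that the APE group acquired co-developing High Performance Computing systems dedicated (but not limited) to LQCD, QUonG aims to provide the scientific community with a comprehensive platform for developing multi-node parallel LQCD applications.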

The QUonG hardware

The QUonG system is a massively parallel computing platform built as a cluster of hybrid elementary computing nodes. Every node is made of commodity Intel-based multi-core host processors, each coupled with a number of latest-generation NVIDIA GPUs acting as floating-point accelerators.

The QUonG communication mesh is built upon our proprietary component: the APEnet+ network card. Its design was driven by the requirements of typical LQCD algorithms, which call for a point-to-point, high-performance, low-latency network where the computing nodes are topologically arranged as the vertices of a three-dimensional torus.
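As an illustration of the torus arrangement, the following minimal C++ sketch computes the neighbor of a node along each axis with wrap-around. The names (`Coord`, `rank_of`, `coord_of`, `neighbor`) and the x-fastest rank ordering are assumptions made for this example; they are not taken from the APEnet+ software.

```cpp
#include <array>
#include <cassert>

// Hypothetical types and names for illustration only; not the APEnet+ API.
using Coord = std::array<int, 3>;

// Wrap a coordinate into [0, size) so that the mesh closes onto itself.
inline int wrap(int x, int size) {
    assert(size > 0);
    return ((x % size) + size) % size;
}

// Linear rank of a node, with x varying fastest (an assumed ordering).
inline int rank_of(const Coord& c, const Coord& dims) {
    return c[0] + dims[0] * (c[1] + dims[1] * c[2]);
}

// Inverse of rank_of: recover the (x, y, z) coordinates from a rank.
inline Coord coord_of(int rank, const Coord& dims) {
    return {rank % dims[0], (rank / dims[0]) % dims[1], rank / (dims[0] * dims[1])};
}

// Rank of the neighbor one step along `axis` (0, 1 or 2) in direction `dir` (+1 or -1).
inline int neighbor(int rank, const Coord& dims, int axis, int dir) {
    assert(axis >= 0 && axis < 3);
    Coord c = coord_of(rank, dims);
    c[axis] = wrap(c[axis] + dir, dims[axis]);
    return rank_of(c, dims);
}
```

On a 4 x 2 x 2 torus, for instance, stepping from node 0 in the negative x direction wraps around to node 3, so every node has exactly six point-to-point links regardless of its position.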

Our reference system is a cluster of QUonG elementary computing units, each of which combines a multi-core CPU, GPU accelerators and an APEnet+ card. This approach makes for a flexible and modular platform that can be tailored to different application requirements by tuning the GPU-to-CPU ratio per unit.

The QUonG Software Stack

QUonG has a two-level hierarchy of parallelism: the first level is the parallelism of the many-core GPUs within one node, the second is the multiplicity of nodes in the cluster assembly.

For the many-core parallelism, QUonG adopts the CUDA programming model and provides a software library of LQCD-optimized data types and algorithms written in C++; the multi-node parallelism is expressed using the SPMD paradigm of MPI.
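The two levels can be pictured as a domain decomposition of the lattice: first across nodes, then across the GPUs of each node. The sketch below is plain C++ with no MPI or CUDA calls, and it rests on assumptions that the page does not state: the three spatial directions are split over the 3D torus, the time direction stays local to a node, and the local sites are shared equally among the GPUs. `LocalLattice`, `local_lattice` and `sites_per_gpu` are hypothetical names, not part of the QUonG library.

```cpp
#include <array>
#include <cassert>

// Hypothetical description of the sub-lattice owned by one node.
struct LocalLattice {
    std::array<int, 4> extent;  // local size in x, y, z, t
    long sites;                 // number of lattice sites held by the node
};

// First level (multi-node): split the three spatial directions across the
// node grid; the time direction is kept whole on every node (an assumption).
inline LocalLattice local_lattice(const std::array<int, 4>& global,
                                  const std::array<int, 3>& nodes) {
    LocalLattice l{};
    l.sites = 1;
    for (int d = 0; d < 4; ++d) {
        const int parts = (d < 3) ? nodes[d] : 1;
        assert(parts > 0 && global[d] % parts == 0);  // require an even split
        l.extent[d] = global[d] / parts;
        l.sites *= l.extent[d];
    }
    return l;
}

// Second level (many-core): share the node's sites equally among its GPUs.
inline long sites_per_gpu(const LocalLattice& l, int gpus_per_node) {
    assert(gpus_per_node > 0 && l.sites % gpus_per_node == 0);
    return l.sites / gpus_per_node;
}
```

In an SPMD program every process would run this same computation with its own rank, so a 32 x 32 x 32 x 64 lattice on a 4 x 2 x 2 node grid gives each node an 8 x 16 x 16 x 64 block, which is then halved between two GPUs.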

The QUonG Prototype

The first prototype of the QUonG parallel system has been installed at the INFN facilities in Rome since early 2012. The ratio chosen for this first deliverable is one (multi-core) host for two GPUs.

As a consequence, the current target for the QUonG elementary mechanical assembly is a 3U "sandwich" made of two Intel-based servers plus an NVIDIA S2050/70/90 multi-GPU system, equipped with the APEnet+ network board.

The assembly collects two QUonG elementary computing units, each made of one server hosting the interface board that controls 2 (out of 4) GPUs inside the S2050/70/90 blade and one APEnet+ board. In this way, a QUonG elementary computing unit is topologically equivalent to two vertices of the APEnet+ 3D mesh.

The current QUonG installation provides 32 TFlops of computing power.

The envisioned QUonG Rack

Building on the configuration of the first QUonG prototype, equipped with current NVIDIA Tesla GPUs based on the Fermi architecture, we envision the final shape of a deployed QUonG system as an assembly of fully populated standard 42U QUonG racks, each with a peak performance of 60 TFlops in single precision (30 TFlops in double precision), at a cost of 5 kEuro/TFlops and with an estimated power consumption of 25 kW/rack.

A typical PFlops-scale QUonG installation, composed of 16 QUonG racks interconnected via the APEnet+ 3D torus network, will be characterized by an estimated power consumption of the order of 400 kW and a cost ratio of the order of 5 kEuro/TFlops.
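The installation figures follow from the per-rack numbers by simple scaling, as the short C++ sketch below shows. The struct and function names are hypothetical, and applying the cost ratio to the single-precision peak is an assumption: the page does not say which precision the 5 kEuro/TFlops figure refers to.

```cpp
#include <cassert>

// Per-rack figures quoted in the text.
struct RackSpec {
    double tflops_sp;         // peak single-precision TFlops per rack
    double tflops_dp;         // peak double-precision TFlops per rack
    double power_kw;          // estimated power consumption per rack
    double keuro_per_tflops;  // cost ratio
};

// Aggregate figures for an installation of several racks.
struct InstallationEstimate {
    double tflops_sp;
    double tflops_dp;
    double power_kw;
    double cost_keuro;  // ASSUMPTION: cost ratio applied to the single-precision peak
};

// Scale the per-rack figures linearly with the number of racks.
inline InstallationEstimate estimate_installation(const RackSpec& rack, int racks) {
    assert(racks > 0);
    InstallationEstimate e{};
    e.tflops_sp = rack.tflops_sp * racks;
    e.tflops_dp = rack.tflops_dp * racks;
    e.power_kw = rack.power_kw * racks;
    e.cost_keuro = e.tflops_sp * rack.keuro_per_tflops;
    return e;
}
```

With 16 racks at 60 TFlops and 25 kW each, this gives 960 TFlops of single-precision peak (roughly 1 PFlops) and 400 kW, matching the orders of magnitude quoted above.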

QUonG Publications

- R. Ammendola, A. Biagioni, O. Frezza, F. Lo Cicero, A. Lonardo, P. S. Paolucci, D. Rossetti, F. Simula, L. Tosoratto, P. Vicini, QUonG: A GPU-based HPC System Dedicated to LQCD Computing, Symposium on Application Accelerators in High-Performance Computing, 2011, pp. 113-122, http://doi.ieeecomputersociety.org/10.1109/SAAHPC.2011.15

QUonG Talks

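- R. Ammendola, Tying GPUs together with APENet+, Mini-Workshop CCR 2010, Napoli, 26 January 2010, http://apegate.roma1.infn.it/~ammendola/talks/ccr_250110.pdf

- R. Ammendola, Review on the GPU-related activities in INFN, Workshop CCR 2010, Catania, 18 May 2010, http://apegate.roma1.infn.it/~ammendola/talks/ccr_180510.pdf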

Scientific Results on QUonG

- M. D'Elia, M. Mariti, Effect of Compactified Dimensions and Background Magnetic Fields on the Phase Structure of SU(N) Gauge Theories, Phys. Rev. Lett. 118, 172001 (2017).

- M. I. Berganza, L. Leuzzi, Criticality of the XY model in complex topologies, arXiv:1211.3991 [cond-mat.stat-mech], http://arxiv.org/abs/1211.3991

- M. D'Elia, M. Mariti and F. Negro, Susceptibility of the QCD vacuum to CP-odd electromagnetic background fields, Phys. Rev. Lett. 110, 082002 (2013), arXiv:1209.0722 [hep-lat], http://xxx.lanl.gov/abs/1209.0722

- F. Rossi, P. Londrillo, A. Sgattoni, S. Sinigardi, G. Turchetti, Robust algorithms for current deposition and efficient memory usage in a GPU Particle In Cell code, 15th Advanced Accelerator Concepts Workshop (AAC 2012), File:Aac.pdf