APEnet+ project

| Line 43: | Line 43: | ||

* Direct access to GPU memory using PCI express peer-to-peer (NVidia Fermi GPUs only). | * Direct access to GPU memory using PCI express peer-to-peer (NVidia Fermi GPUs only). | ||

| − | + | <br> | |

| + | |||

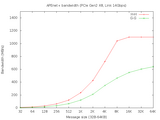

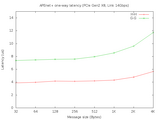

| + | <gallery widths=300px caption="APEnet+ 3 links test board, one-way bandwidth test, host-to-host and GPU-to-GPU, link speed cap to 14Gbps."> | ||

| + | File:apenet_bw_logx.png | one-way bandwidth test | ||

| + | File:apenet_lat_logx.png | one-way latency test | ||

| + | </gallery> | ||

=== GPU Cluster installation === | === GPU Cluster installation === | ||

Revision as of 15:19, 7 February 2012

APEnet+ is the new generation of our 3D network adapters for PC clusters.

Project Background

Many scientific computations need multi-node parallelism to keep up with ever-increasing requirements in both space (memory) and time (speed). Using GPUs as accelerators introduces yet another level of complexity for the programmer and may result in large overheads due to the bookkeeping of memory buffers. Moreover, the most demanding problems can easily require more than one PetaFLOPS of sustained computing power, i.e. thousands of GPUs orchestrated through a parallel programming model, most commonly the Message Passing Interface (MPI).

- Pictures of APEnet boards

APEnet+ aim and features

The project aims to develop a low-latency, high-bandwidth direct network that supports state-of-the-art wire speeds and PCIe Gen2 x8, while improving the price/performance ratio as the cluster size scales. The network interface provides hardware support for the RDMA programming model. A Linux kernel driver, a set of low-level RDMA APIs and an OpenMPI library driver are available, which makes porting standard applications painless.
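To illustrate what one-sided RDMA semantics mean, here is a toy, in-process sketch of PUT and GET, the two basic operations APEnet+ provides. It is not the APEnet+ RDMA API, whose calls are not described on this page: the `Node` class and the `register`, `put` and `get` functions are hypothetical, and "remote memory" is just a Python `bytearray`. The point is that the initiator writes into, or reads from, a buffer the target registered in advance, with no matching receive call on the target side.

```python
from typing import Dict


class Node:
    """Hypothetical network endpoint exposing registered memory (not the APEnet+ API)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.buffers: Dict[int, bytearray] = {}  # registration key -> buffer
        self._next_key = 0

    def register(self, size: int) -> int:
        """Register a zero-filled buffer for remote access and return its key."""
        key = self._next_key
        self._next_key += 1
        self.buffers[key] = bytearray(size)
        return key


def put(data: bytes, dst: Node, key: int, offset: int) -> None:
    """One-sided write: copy local data into dst's registered buffer at offset."""
    buf = dst.buffers[key]
    if offset < 0 or offset + len(data) > len(buf):
        raise ValueError("PUT exceeds the registered buffer")
    buf[offset:offset + len(data)] = data


def get(src: Node, key: int, offset: int, length: int) -> bytes:
    """One-sided read: copy length bytes out of src's registered buffer."""
    buf = src.buffers[key]
    if offset < 0 or offset + length > len(buf):
        raise ValueError("GET exceeds the registered buffer")
    return bytes(buf[offset:offset + length])
```

For example, after `k = b.register(16)` and `put(b"hello", b, k, 4)`, the call `get(b, k, 4, 5)` returns `b"hello"`. The bounds checks mirror the general RDMA rule that transfers must stay inside memory the target has explicitly registered.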

Highlights

- APEnet+ is a packet-based direct network of point-to-point links arranged in a 2D/3D toroidal topology.

- Packets have a fixed-size envelope (header + footer) and are automatically routed to their final destinations using wormhole, dimension-ordered static routing with deadlock avoidance.

- Error detection is implemented via a CRC at the packet level.

- Basic RDMA capabilities, PUT and GET, are implemented at the firmware level.

- Fault-tolerance features (to be added starting in 2011).

- Direct access to GPU memory using PCI Express peer-to-peer (NVIDIA Fermi GPUs only).

- APEnet+ 3-link test board: one-way bandwidth test, host-to-host and GPU-to-GPU, with link speed capped at 14 Gbps.
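Dimension-ordered routing on a torus can be sketched as follows. This is a minimal, hypothetical Python model, not the APEnet+ firmware: it assumes a 3D torus of size `dims`, corrects the coordinates in the fixed order X, then Y, then Z, and on each ring takes the shorter way around (going in the positive direction on a tie). It only computes the sequence of hops; wormhole flow control and the deadlock-avoidance mechanism are not modeled.

```python
from typing import List, Tuple

Coord = Tuple[int, int, int]


def ring_direction(src: int, dst: int, size: int) -> int:
    """Return +1 or -1: the shorter direction from src to dst on a ring of `size` nodes."""
    forward = (dst - src) % size
    # Prefer the positive direction when it is not longer than the negative one.
    return 1 if forward <= size - forward else -1


def dimension_ordered_route(src: Coord, dst: Coord, dims: Coord) -> List[Coord]:
    """List the nodes visited from src to dst, fully correcting X, then Y, then Z."""
    path: List[Coord] = [src]
    cur = list(src)
    for axis in range(3):
        while cur[axis] != dst[axis]:
            step = ring_direction(cur[axis], dst[axis], dims[axis])
            # Modulo arithmetic models the wrap-around link of the torus.
            cur[axis] = (cur[axis] + step) % dims[axis]
            path.append((cur[0], cur[1], cur[2]))
    return path
```

On a 4×4×4 torus, `dimension_ordered_route((0, 0, 0), (3, 1, 0), (4, 4, 4))` returns `[(0, 0, 0), (3, 0, 0), (3, 1, 0)]`: the X hop uses the wrap-around link, then a single Y hop follows. Because the path depends only on source and destination, the routing is static.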

GPU Cluster installation

- Where: APE lab (INFN Roma 1)

- What: GPUcluster

APEnet+ Public Documentation

Internal links (require login):

APEnet+ HW, APEnet+ SW, APEnet+ specification, Next Deadlines For Publication