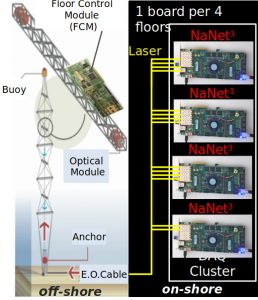

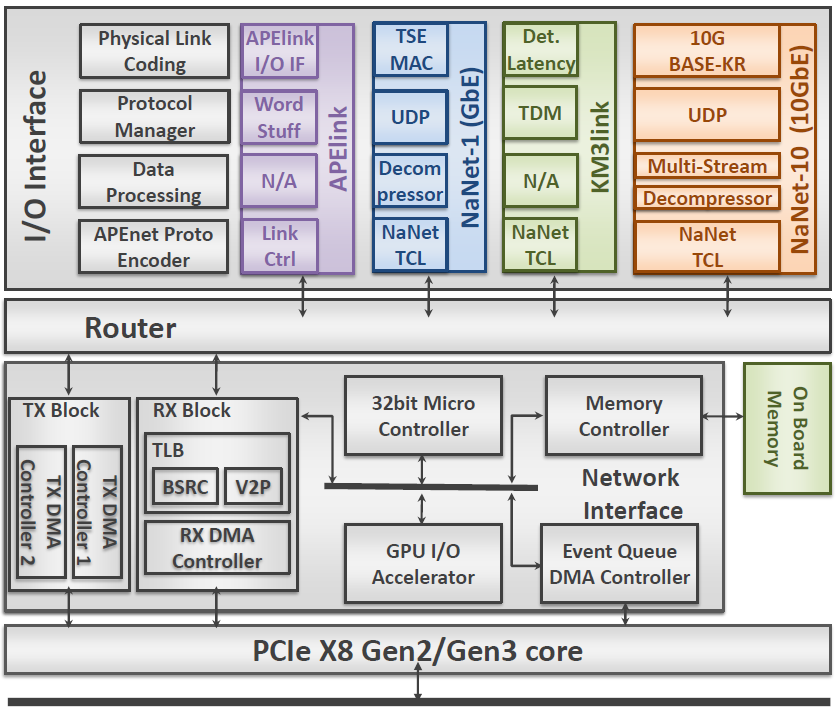

The goal of the NaNet project is the design and implementation of a family of FPGA-based PCIe Network Interface Cards for High Energy Physics, bridging the front-end electronics and the software trigger computing nodes.

The design supports both standard and custom channels: GbE (1000BASE-T), 10GbE (10GBASE-KR), 40GbE, APElink (a custom 34 Gbps link dedicated to HPC systems) and KM3link (a deterministic-latency 2.5 Gbps link used in the KM3NeT-IT experiment data acquisition system).

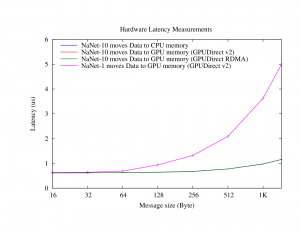

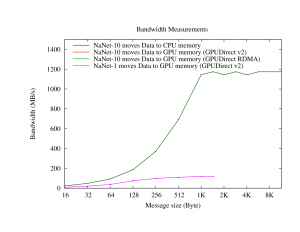

The RDMA feature, combined with a transport protocol layer offload module and a data stream processing stage, makes NaNet a low-latency NIC suitable for online processing of data streams.

NaNet GPUDirect/RDMA capability enables the connected processing system to exploit the high computing performance of modern GPUs in real-time applications.

It performs four-stage processing on the data stream, following the OSI model. The Physical Link Coding stage implements, as the name suggests, the channel physical layer (e.g. 1000BASE-T). The Protocol Manager stage handles, depending on the kind of channel, the data link, network and transport layers (e.g. Time Division Multiplexing or UDP). The Data Processing stage implements application-dependent transformations on data streams (e.g. compression/decompression). The APEnet Protocol Encoder performs protocol adaptation: it encapsulates inbound payload data in the APElink packet protocol, used in the inner NaNet logic, and decapsulates outbound APElink packets before re-encapsulating their payload in the output channel transport protocol (e.g. UDP).
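The inbound path through the four stages can be pictured as a chain of functions. The sketch below is only an illustration: every function name, the 8-byte header layout and the choice of zlib for the Data Processing stage are assumptions made for the example, not the real APElink packet format or NaNet firmware logic.

```python
import struct
import zlib

# Hypothetical 8-byte header: destination port (H), flags (H), payload length (I).
# This is NOT the real APElink packet layout, only a stand-in.
HEADER = struct.Struct("<HHI")

def physical_link_decode(frame: bytes) -> bytes:
    """Stage 1 stand-in: a real design would recover symbols from the line code."""
    return frame

def protocol_manager(datagram: bytes, transport_header_len: int = 8) -> bytes:
    """Stage 2 stand-in: strip a UDP-like header and keep the payload."""
    return datagram[transport_header_len:]

def data_processing(payload: bytes) -> bytes:
    """Stage 3 stand-in: application-dependent transform, here decompression."""
    return zlib.decompress(payload)

def protocol_encoder(payload: bytes, dest_port: int) -> bytes:
    """Stage 4 stand-in: encapsulate the payload in an internal packet."""
    return HEADER.pack(dest_port, 0, len(payload)) + payload

def inbound(frame: bytes, dest_port: int) -> bytes:
    """Run one frame through the four stages in order."""
    payload = data_processing(protocol_manager(physical_link_decode(frame)))
    return protocol_encoder(payload, dest_port)

def decapsulate(packet: bytes):
    """Reverse of stage 4: return (destination port, payload)."""
    dest_port, _flags, length = HEADER.unpack_from(packet)
    return dest_port, packet[HEADER.size:HEADER.size + length]
```

For example, `inbound(b"\x00" * 8 + zlib.compress(data), dest_port=2)` yields an internal packet from which `decapsulate` recovers port 2 and the original `data`.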

It supports a configurable number of ports implementing a full crossbar switch responsible for data routing and dispatch. The number and bit-width of the switch ports and the routing algorithm can each be defined by the user to automatically achieve a desired configuration. The Router block dynamically interconnects the ports and comprises a fully connected switch, plus routing and arbitration blocks managing multiple data flows at 2.8 GB/s.

The block acts on the transmitting side by gathering data coming in from the PCIe port and forwarding them to the Router destination ports, while on the receiving side it provides support for RDMA in communications involving both the host and the GPU (via the dedicated GPU I/O Accelerator module).
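To make the interplay of switching and arbitration concrete, here is a toy software model of a fully connected switch. The `Crossbar` class and the per-output round-robin policy are assumptions chosen for illustration; the text only states that the routing algorithm is user-defined, and the sketch says nothing about how the hardware is actually implemented.

```python
from collections import deque

class Crossbar:
    """Toy model of a fully connected switch with per-output round-robin arbitration."""

    def __init__(self, n_ports: int):
        self.n_ports = n_ports
        # One FIFO per (input, output) pair.
        self.queues = [[deque() for _ in range(n_ports)] for _ in range(n_ports)]
        # Per-output pointer to the input that gets priority next.
        self.next_input = [0] * n_ports

    def push(self, src: int, dst: int, packet) -> None:
        """Enqueue a packet arriving on input `src` and routed to output `dst`."""
        self.queues[src][dst].append(packet)

    def cycle(self) -> dict:
        """Run one arbitration round: each output accepts at most one packet."""
        delivered = {}
        for dst in range(self.n_ports):
            for offset in range(self.n_ports):
                src = (self.next_input[dst] + offset) % self.n_ports
                if self.queues[src][dst]:
                    delivered[dst] = (src, self.queues[src][dst].popleft())
                    # The winner moves to the back of the priority order.
                    self.next_input[dst] = (src + 1) % self.n_ports
                    break
        return delivered
```

When two inputs contend for the same output, one is served per round and the other wins the next round. As a simplification, this model lets a single input feed several outputs in the same round.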

The module is built upon a powerful commercial core from PLDA that sports a simplified but efficient backend interface and multiple DMA engines.

| | | | |
|---|---|---|---|
| Year | | | |
| Device Family | Development Kit | Terasic DE5 | Terasic DE5 |
| Channel Technology | | | |
| Transmission Protocol | | | |
| Number of channels | | | |
| PCIe | | | |
| NVIDIA GPUDirect RDMA | | | |
| Real-time Processing | | | |
| HEP experiment | | | |

NaNet Software Stack

Software components for NaNet operation are needed both on the x86 host and on the Nios II FPGA-embedded μcontroller. On the x86 host, a GNU/Linux kernel driver and an application library are present.

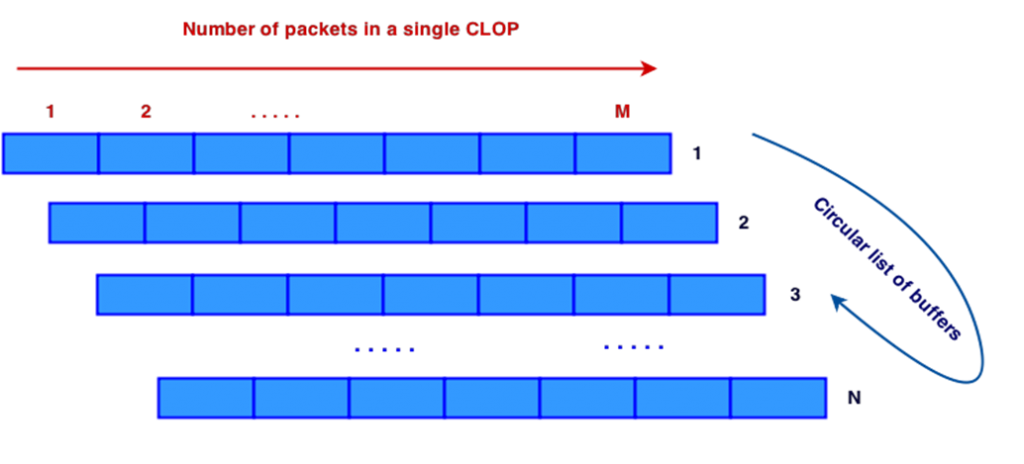

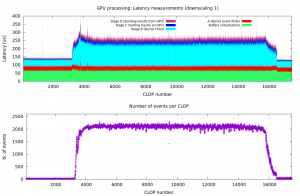

The application library provides an API mainly for: opening and closing the device; registering (i.e. allocating, pinning and returning to the application the virtual addresses of) and deregistering circular lists of persistent receiving buffers (CLOPs) in GPU and/or host memory; and signalling receive events on these registered buffers to the application (e.g. to invoke a GPU kernel to process data just received in GPU memory).

On the μcontroller, a single-process application is in charge of device configuration, generation of the destination virtual address inside the CLOP for incoming packet payloads, and the virtual-to-physical memory address translation performed before the PCIe DMA transaction to the destination buffer takes place.
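The two address-related tasks of the μcontroller can be sketched in a few lines. Everything here is hypothetical: the `Clop` class, the policy of moving to the next buffer when a payload no longer fits, the 4 KiB page size and the dictionary used as a page table are assumptions for the example, not the actual firmware data structures.

```python
PAGE_SIZE = 4096  # assumed page size; the real value depends on the target memory

class Clop:
    """Circular list of persistent receive buffers, identified by virtual addresses."""

    def __init__(self, buffer_vaddrs, buffer_size):
        self.buffer_vaddrs = list(buffer_vaddrs)
        self.buffer_size = buffer_size
        self.current = 0   # index of the buffer being filled
        self.offset = 0    # write offset inside that buffer

    def next_destination(self, payload_len: int) -> int:
        """Return the virtual address for the next payload, wrapping around the list."""
        if payload_len > self.buffer_size:
            raise ValueError("payload larger than a receive buffer")
        if self.offset + payload_len > self.buffer_size:
            # Current buffer cannot hold the payload: advance circularly.
            self.current = (self.current + 1) % len(self.buffer_vaddrs)
            self.offset = 0
        vaddr = self.buffer_vaddrs[self.current] + self.offset
        self.offset += payload_len
        return vaddr

def translate(page_table: dict, vaddr: int) -> int:
    """Map a virtual address to a physical one via the page recorded at registration."""
    vpage, page_offset = divmod(vaddr, PAGE_SIZE)
    return page_table[vpage] * PAGE_SIZE + page_offset
```

With two 4096-byte buffers at `0x10000` and `0x20000`, two consecutive 3000-byte payloads land at the start of the first and then of the second buffer. The sketch ignores payloads that straddle a page boundary, which would need one translation per page.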

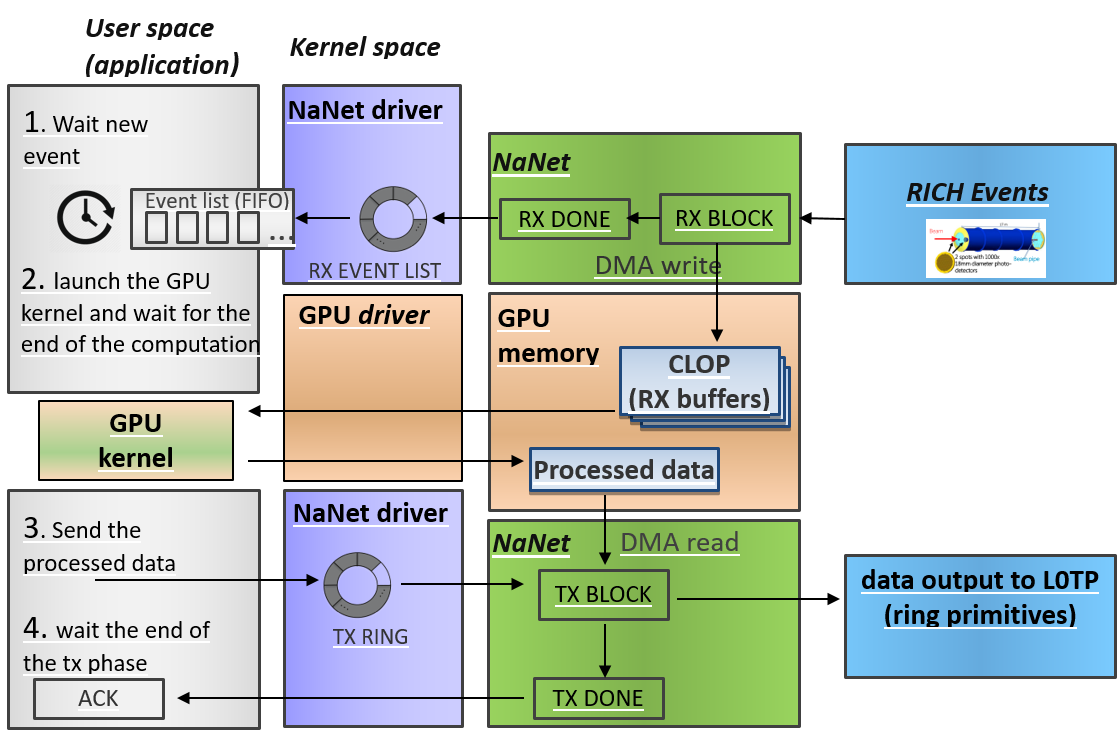

The control flow of processes through kernel and user space is detailed below:

- NaNet NIC DMA-writes a “receiving done” event in a memory region called “event queue”; the event is trapped by a kernel-space device driver and notified to the user application, which launches a CUDA kernel to process the data using the GPU;

- Results of the processing are eventually sent via the NaNet board to the network:

  - data are DMA-read directly from GPU memory;

  - the kernel device driver (invoked by the user application on the host) instructs the NIC by filling a “descriptor” into a dedicated, DMA-accessible memory region called “TX ring”;

  - the presence of new descriptors is notified to NaNet by writing on a doorbell register over PCIe;

  - NaNet NIC issues a “tx done” completion event in the “event queue”.
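The transmit half of this flow (descriptor in the TX ring, doorbell write, “tx done” event) can be mimicked in plain software. The `Descriptor` fields, the `ToyNic` class and the ring-full check are assumptions made for the example; the real descriptor format and register interface are not described here.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Descriptor:
    gpu_addr: int   # source address in GPU memory (hypothetical field)
    length: int     # number of bytes to send (hypothetical field)

class ToyNic:
    """Software stand-in for the driver and NIC sides of the TX path."""

    def __init__(self, ring_size: int, gpu_memory: bytes):
        self.tx_ring = [None] * ring_size   # DMA-accessible descriptor ring
        self.head = 0                       # next slot the driver fills
        self.tail = 0                       # next slot the NIC consumes
        self.event_queue = deque()          # completion events read by the driver
        self.gpu_memory = gpu_memory
        self.wire = []                      # payloads "sent" to the network

    def post(self, desc: Descriptor) -> None:
        """Driver side: fill a descriptor into the TX ring."""
        if (self.head + 1) % len(self.tx_ring) == self.tail:
            raise BufferError("TX ring full")
        self.tx_ring[self.head] = desc
        self.head = (self.head + 1) % len(self.tx_ring)

    def ring_doorbell(self) -> None:
        """Driver side: the doorbell write tells the NIC that new descriptors exist."""
        while self.tail != self.head:
            desc = self.tx_ring[self.tail]
            # NIC side: read the payload straight from (simulated) GPU memory.
            end = desc.gpu_addr + desc.length
            self.wire.append(self.gpu_memory[desc.gpu_addr:end])
            # NIC side: report completion in the event queue.
            self.event_queue.append(("tx done", self.tail))
            self.tail = (self.tail + 1) % len(self.tx_ring)
```

Posting two descriptors and then calling `ring_doorbell()` once drains both and leaves two “tx done” entries in `event_queue`, which is the batching that a doorbell register makes possible.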